We use the general term assessment to refer to all those activities undertaken by teachers -- and by their students in assessing themselves -- that provide information to be used as feedback to modify teaching and learning activities. Such assessment becomes formative assessment when the evidence is actually used to adapt the teaching to meet student needs. (Black & Wiliam, 1998)

Assessment

Important Things Which I Assess

Students studying HCID are required to complete an in-course assignment, which forms the main part of the summative assessment. The assignment is group-based because areas such as human factors methods (e.g. hierarchical task analysis) and usability evaluation are better approached in a working team. The team-based approach encourages simulation of real-life scenarios, and students can practise on each other. So if I had to list the things I believe to be important in HCI assessment, one of them would be the ability to work in groups. It is virtually impossible to assess students' understanding of evaluation techniques if they are not working in teams, because they need to share skills in a realistic environment in order to carry out a full-scale evaluation of a system with real-world users. Where using real-world users is not possible, as in a typical undergraduate environment, students still need to work together to simulate a real-world evaluation by evaluating or testing each other as target users.

It follows that an understanding of evaluation techniques is an area I consider important. When I assess students, I wish to find out 'how' they arrived at a conclusion regarding their evaluation of a system or interface, 'what' they discovered and whether they made logical sense of it. These aspects need to be assessed whether the evaluation is qualitative and heuristic-based or a quantitative, goal-driven evaluation or test.

In conjunction with this, the other important area is a sense of really knowing the user. In other words, I wish to see that students understand their users' tasks and the context in which they work, play or live, and that they are fully able to analyse routine or non-routine problems and tasks associated with target users.

The above areas all combine in design, which is the other main area I consider important. If students know their end-user; use group work effectively to evaluate and test as their project proceeds; can specify and plan using an appropriate tool such as storyboarding; and can recognise, evaluate and design elements to produce appropriate metaphors with consistent affordance for target users, then they will have achieved, constructed or recognised a working, usable product. So the ability to specify, plan and design appropriate, user-centric interfaces with good affordance is another feature I assess. This is illustrated in Figure 11.

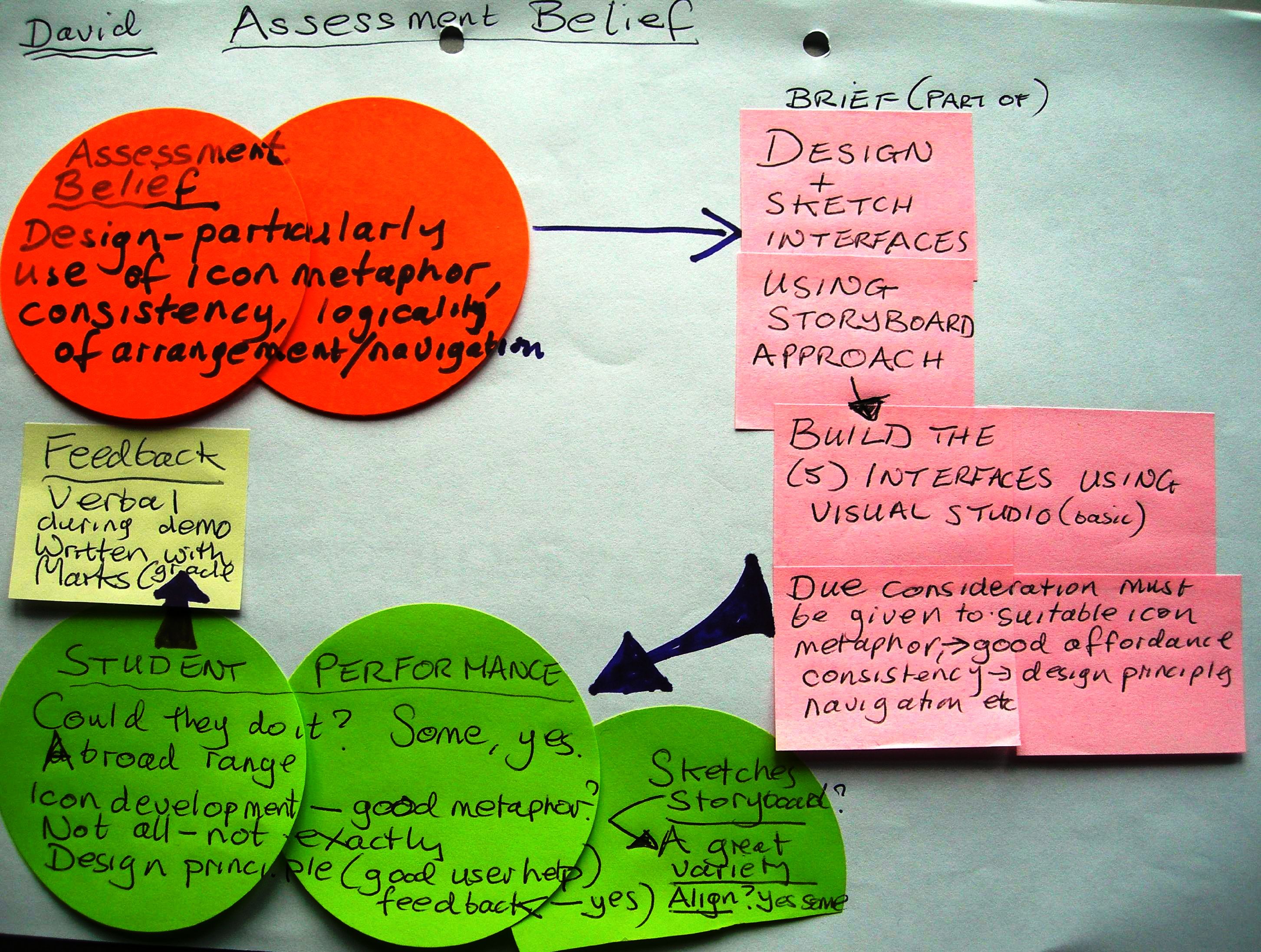

Fig. 11 Artefact. Assessment Belief

Assessment Beliefs and Feedback

Figure 11 demonstrates my assessment belief and is another map deliberately constructed within set, short time constraints during a Disciplinary Commons meeting. I have chosen to present this as an artefact (like the other maps shown elsewhere) rather than as a tidier screen-drawn illustration because the limited time frame again forced me to pinpoint what is important to me in terms of assessment. So group work, evaluation, knowing the user and planning should all combine to produce a well-designed product with consistency, good use of metaphor, logicality of arrangement/navigation and affordance. To achieve this, students are given a brief which includes a requirement to design and sketch interfaces using a storyboard approach and to build the interfaces using a Visual development environment.

In fact, if I were to repeat this map, I would include the aspects of group work, user-centric analysis and evaluation discussed above. The assignment itself was a case study based on a Health Product company and it included all these things (the assignment requirements are discussed further below). The map also shows student performance, basically: 'could they do what was expected of them?' It illustrates that there was a broad range of skill and understanding, particularly in the areas of knowing the user through appropriate metaphor and instruction, planning the design with user-related storyboards, and evaluating with appropriate principles. Feedback was verbal and immediate during a demonstration of their system, and written feedback was given with marks at a later stage.

Another aspect of assessment which I consider crucial is the combination of formative and summative approaches.

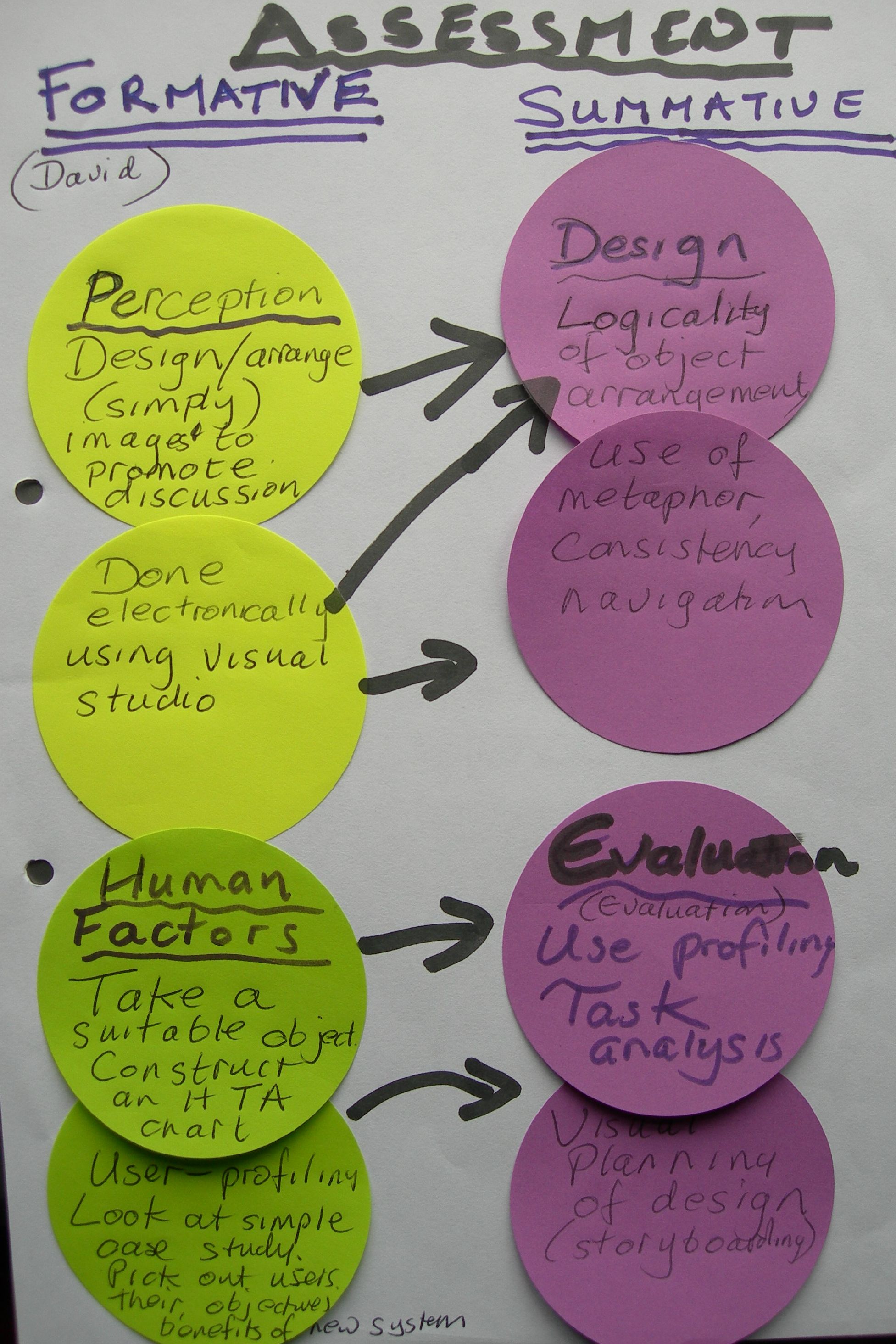

Fig. 12 Artefact. Formative and Summative Assessment Map

Figure 12 is another map constructed within a brief time frame and illustrates the importance of these two types of assessment and how they link together. The map indicates how formative areas of assessment within this module included a practical exercise on perception which involved looking at unusual images, discussing them, capturing and manipulating them within Visual Studio (see Contents section and particularly Figure 5). This was assessed verbally immediately following the construction of a simple interface containing the arranged images. A human factors exercise was also used for formative assessment. This involved task analysis based on the use of a suitable student hand-held object. Students were asked to select a goal based on this object and to construct an HTA chart on a flip chart based on this overriding goal (see Fig 6 and Content discussion). This was collected, checked and handed back to the students a week later with quick written and verbal feedback.
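The HTA exercise described above decomposes one overriding goal into numbered subtasks, each level governed by a plan. As a rough illustration only, such a chart could be represented as a small tree. The goal, subtasks and plans below are hypothetical and are not taken from any student's flip chart; the `Task` class and `render` function are likewise invented for this sketch.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """One node of a hierarchical task analysis (HTA) chart."""
    name: str
    plan: str = ""  # the order in which the subtasks are carried out
    subtasks: list["Task"] = field(default_factory=list)


def render(task: Task, number: str = "0", depth: int = 0) -> list[str]:
    """Return the chart as indented lines with hierarchical numbering."""
    line = "  " * depth + f"{number} {task.name}"
    if task.plan:
        line += f" [plan {number}: {task.plan}]"
    lines = [line]
    for i, sub in enumerate(task.subtasks, start=1):
        # Children of the top-level goal are numbered 1, 2, 3; deeper levels 2.1, 2.2
        child_number = str(i) if number == "0" else f"{number}.{i}"
        lines.extend(render(sub, child_number, depth + 1))
    return lines


# Hypothetical example: a goal based on a hand-held object (a mobile phone).
hta = Task(
    "Send a text message from a mobile phone",
    plan="do 1, then 2, then 3",
    subtasks=[
        Task("Open the messaging application"),
        Task(
            "Compose the message",
            plan="do 2.1, then 2.2",
            subtasks=[Task("Choose the recipient"), Task("Type the text")],
        ),
        Task("Press send"),
    ],
)

print("\n".join(render(hta)))
```

Numbering the goal as 0 and its subtasks as 1, 2, 2.1 and so on follows the common HTA convention; a flip-chart version would show the same structure as boxes and connecting lines.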

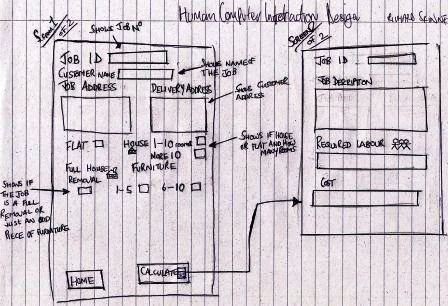

User-profiling and storyboarding were also done as small group practical exercises, and the storyboard approach (based on an exercise involving the calculation of the complete cost of furniture removals) is partly illustrated in Figure 13. This exercise required students to consider the various factors involved in moving furniture from house to house for a fictional removal company (size/amount of furniture, distance for removal, number of stairs/staircases, size of house/flat) and to construct a storyboard showing the screens required to calculate the amount of labour and the total removal cost.

Fig. 13 Artefact. Formative assessment. Student constructed storyboard for Moving House
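The moving-house storyboard has to plan screens that collect the listed factors and then report the amount of labour and the total cost. A minimal sketch of the calculation those screens might support is given below. Every rate and the formula itself are assumptions invented for illustration; the exercise brief does not specify them, and the names `RemovalJob`, `labour_hours` and `total_cost` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RemovalJob:
    """Inputs a storyboard screen might collect (hypothetical fields)."""
    furniture_items: int  # size/amount of furniture
    distance_km: float    # distance for removal
    staircases: int       # number of stairs/staircases
    rooms: int            # size of house/flat


# Hypothetical rates, invented purely for illustration.
ITEMS_PER_HOUR = 10          # items one crew can move per hour
HOURS_PER_STAIRCASE = 0.5    # extra time for each staircase
HOURS_PER_ROOM = 0.25        # extra time for each room
HOURLY_RATE = 20.0           # labour cost per hour
COST_PER_KM = 1.5            # transport cost per kilometre


def labour_hours(job: RemovalJob) -> float:
    """Estimate the amount of labour in hours."""
    return (
        job.furniture_items / ITEMS_PER_HOUR
        + job.staircases * HOURS_PER_STAIRCASE
        + job.rooms * HOURS_PER_ROOM
    )


def total_cost(job: RemovalJob) -> float:
    """Total removal cost: labour plus transport."""
    return labour_hours(job) * HOURLY_RATE + job.distance_km * COST_PER_KM


job = RemovalJob(furniture_items=40, distance_km=30, staircases=2, rooms=4)
print(labour_hours(job))  # 6.0
print(total_cost(job))    # 165.0
```

Separating the labour estimate from the total cost mirrors the two results the storyboard is asked to show, so each function could correspond to one output screen.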

The artefact in Figure 13 shows the result of storyboarding by a pair of students who grasped the idea of this form of planning quite quickly. As with all of the formative assessments described above, students were provided with either immediate verbal feedback (as was the case with this example) or brief written feedback within a week. The example shown and the accompanying formative assessment generated the confidence to carry out the assignment-based tasks, which were completed at a later date and assessed summatively. In other words, the formative assessments helped provide students with the skills and assurance to deal with the summative assignment requirements later in the semester (period). The map in Fig 12 could be extended to include other aspects of formative assessment which underpin the summative assessment used in the large case study. In particular, usability evaluation/testing and planning using a storyboard tool, as in Fig 13, would be included if I constructed the map again.

The artefact in Figure 14 shows the official learning outcomes for the summative HCID assignment. These are placed on the front cover of all assignment briefs, and it is interesting to note that these outcomes are reflected in my discussion of what is important to me in terms of assessment. Over time the outcomes have not continued to relate well to the course module, but they do complement and address the important aspects which I wish to assess (described above). If I were to change the unit descriptor, I would modify the outcomes to reflect interaction with any digital artefact, and in fact this has been done for a newly revalidated module which is similar to HCID.

Fig. 14 Artefact. Learning Outcomes Assessed

Learning Outcomes/Objectives Assessed:

[Note: Cross referenced with the Unit syllabus]

Knowledge and Understanding

1. Identify the application, issues, design and functionality of the human computer interface in the context of interaction with a software model

Cognitive skills

2. Analyse non-routine problems in the context of human computer interaction, assessing the suitability of proposed solutions

3. Specify, design and evaluate elements of a prototype interface with functioning application.

Practical and Professional skills

4. Use appropriate visual environment to build and test elements of a prototype interface with functioning application.

Transferable Skills

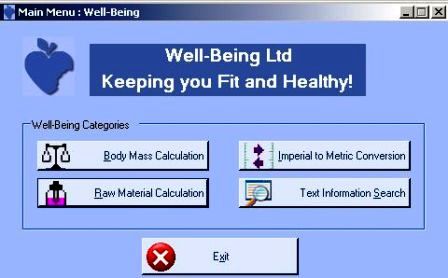

As indicated above, the summative assessment was an in-course assignment consisting of a case study relating to a Health Product company. It required student teams to focus on the areas which I consider important to assess and included the following requirements: an evaluation of the Department of Health website; the planning of their own fictional Health company system using user-profiling, user-centric task analysis and storyboard tools; quantitative and qualitative evaluation of their system; building the system in a Visual development environment with due consideration given to use of metaphor, affordance and design principles of their choice; and final evaluation/testing of their system. The artefacts in Figure 15 show some screen shots of their ongoing design for the Health Product company.

Fig. 15 Artefacts. Samples of student design. Screen shots of Health Interface

These were captured during and following construction of their system, so the first artefact in Fig. 15 is literally a digital photo of a monitor with a student system in development (hence the slightly awkward image). The second artefact in Fig. 15 was a screen shot captured in the normal way. Both demonstrate that students gave due consideration to use of metaphor, affordance and logicality of design. Both also (though this is not shown) achieved consistency of design throughout a series of pages, and the lower one in particular demonstrated that the students were aware of their users' needs and task goals.

Figure 15 also demonstrates that the formative work and assessment done earlier in the module helped underpin this summative work and enabled students to fulfil the summative requirements. However, if I were to use this particular assessment again, I would focus students on (and possibly weight) the human factors methods more. The above designs were good and reflect good practice, but in some cases similar designs were done at the expense of the user. In other words, students achieved good logicality of arrangement and navigation but did not appear to know their users, and their consistency, use of metaphor and general affordance reflected this.

References

Black, P., & Wiliam, D. (1998). Inside the black box: Raising standards through classroom assessment. King's College London Publishers.